Logistic Regression with Stochastic Gradient Descent

Machine Learning

The code for the whole program can be found at the end of the post.

To start with, logistic regression is a discriminative machine learning algorithm: it models P(C|X), the probability of a class given a data point, directly.

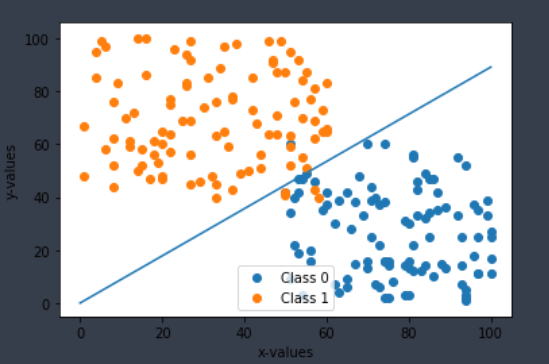

This time I created some artificial data using Python's `random` library. Each data point is an <x,y> pair with a class label. It looks something like this:
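A minimal sketch of how such data could be generated with Python's `random` module. The cluster centers, spread, sample count, seed, and the `make_data` name are assumptions for illustration, not the values used in this post.

```python
import random

def make_data(n_per_class=50, seed=0):
    """Return a list of ((x, y), label) tuples for two Gaussian blobs."""
    rng = random.Random(seed)  # seeded so the data is reproducible
    data = []
    for _ in range(n_per_class):
        # Class 0: points scattered around (2, 2) (assumed center)
        data.append(((rng.gauss(2.0, 1.0), rng.gauss(2.0, 1.0)), 0))
        # Class 1: points scattered around (6, 6) (assumed center)
        data.append(((rng.gauss(6.0, 1.0), rng.gauss(6.0, 1.0)), 1))
    rng.shuffle(data)  # mix the classes so SGD sees them in random order
    return data

data = make_data()
for point, label in data[:3]:
    print(point, label)
```

Seeding the generator keeps the dataset identical between runs, which makes it easier to compare training results.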

For any given data point X, logistic regression gives P(C|X) as:
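In the standard formulation of logistic regression, the class probability is the sigmoid of a linear function of the input. Assuming two classes and a bias term folded into w (by appending a constant 1 to x), which may differ in detail from the notation used in this post, it reads:

```latex
P(C = 1 \mid x) = \sigma(w^\top x) = \frac{1}{1 + e^{-w^\top x}},
\qquad
P(C = 0 \mid x) = 1 - P(C = 1 \mid x)
```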

The parameter ‘w’ is the weight vector. We optimize ‘w’ so that P(C = ‘Class1’|x) comes out close to 1 for points in Class 1 and close to 0 for points in Class 0, which lets us classify each data point accordingly.

We will be using stochastic gradient descent for optimizing the weight vector.

Since we’re using stochastic gradient descent, the error is calculated for a single data point at a time. The formula for the error is:

where Ypredicted is P(C|X) from logistic regression, and Yactual is 1 if the data point belongs to Class 1 and 0 if it belongs to Class 0.
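As an illustration, assume the per-point error is the cross-entropy (log loss), a common choice for logistic regression though not necessarily the formula used in this post. The function names and the `eps` clipping value below are hypothetical:

```python
import math

def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def point_error(w, x, y_actual, eps=1e-12):
    """Cross-entropy error for one data point (assumed error function).

    w and x are equal-length sequences; x[0] is a constant 1 for the bias.
    """
    y_predicted = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
    # Clip so log() never receives exactly 0
    y_predicted = min(max(y_predicted, eps), 1.0 - eps)
    return -(y_actual * math.log(y_predicted)
             + (1 - y_actual) * math.log(1.0 - y_predicted))

w = [0.0, 0.0, 0.0]
print(point_error(w, [1.0, 2.0, 3.0], 1))  # w = 0 gives Ypredicted = 0.5
```

The error is small when Ypredicted is close to Yactual and grows without bound as the prediction moves toward the wrong class.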

As we have seen earlier, we now calculate the gradient of the error function with respect to ‘w’, since ‘w’ is the parameter being optimized. This gives:
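As a worked example, assume the per-point error is the cross-entropy with a sigmoid prediction. This is a common choice, and the error function used in this post may differ (a squared error, for instance, would pick up an extra factor of ŷ(1 − ŷ)). Writing ŷ for Ypredicted and y for Yactual, the chain rule gives:

```latex
\begin{aligned}
E(w) &= -\bigl[\, y \log \hat{y} + (1 - y) \log (1 - \hat{y}) \,\bigr],
\qquad \hat{y} = \sigma(w^\top x) \\
\frac{\partial E}{\partial \hat{y}} &= \frac{\hat{y} - y}{\hat{y}\,(1 - \hat{y})},
\qquad
\frac{\partial \hat{y}}{\partial w} = \hat{y}\,(1 - \hat{y})\, x \\
\nabla_w E &= (\hat{y} - y)\, x
\end{aligned}
```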

The following equation is used to update the weight vector:

Here, the learning rate is a predefined constant.
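A minimal sketch of one such update, assuming the usual gradient descent rule w ← w − η ∇E and the cross-entropy gradient (Ypredicted − Yactual) · x. The names `sgd_step` and `learning_rate` and the value 0.1 are illustrative, not taken from this post:

```python
import math

def sgd_step(w, x, y_actual, learning_rate=0.1):
    """Return the weights after one gradient step on a single point."""
    z = sum(wi * xi for wi, xi in zip(w, x))
    y_predicted = 1.0 / (1.0 + math.exp(-z))  # sigmoid; fine for moderate z
    # Gradient of the (assumed) cross-entropy error w.r.t. w
    gradient = [(y_predicted - y_actual) * xi for xi in x]
    # Move against the gradient, scaled by the learning rate
    return [wi - learning_rate * gi for wi, gi in zip(w, gradient)]

w = [0.0, 0.0, 0.0]
x = [1.0, 2.0, 3.0]  # leading 1.0 is the bias input
w = sgd_step(w, x, y_actual=1)
print(w)
```

A larger learning rate takes bigger steps and may overshoot; a smaller one is more stable but needs more iterations.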

In this implementation, we only update the weight vector if a point is misclassified. (Standard stochastic gradient descent updates on every sampled point; skipping correctly classified points is a perceptron-style variation.) So after calculating the predicted value, we first check whether the point is misclassified, and only then update the weight vector. You’ll get a better picture seeing the implementation below:
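A minimal sketch of such a training loop, not the code from this post. It assumes the cross-entropy gradient (Ypredicted − Yactual) · x, a 0.5 classification threshold, a bias input of 1 prepended to each point, and that one iteration means one randomly drawn point. The names and hyperparameters (`train`, `learning_rate=0.1`, the toy `data` list) are hypothetical:

```python
import math
import random

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def train(data, iterations=1000, learning_rate=0.1, seed=0):
    """data: list of ((x, y), label) with label 0 or 1. Returns w."""
    rng = random.Random(seed)
    w = [0.0, 0.0, 0.0]  # [bias, weight for x, weight for y]
    for _ in range(iterations):
        (px, py), label = rng.choice(data)  # one random point per step
        features = [1.0, px, py]
        y_predicted = sigmoid(sum(wi * fi for wi, fi in zip(w, features)))
        predicted_label = 1 if y_predicted >= 0.5 else 0
        if predicted_label != label:  # update only on a misclassified point
            w = [wi - learning_rate * (y_predicted - label) * fi
                 for wi, fi in zip(w, features)]
    return w

# Toy, clearly separable data (made up for this example)
data = [((-2.0, -1.0), 0), ((-1.0, -2.0), 0), ((-1.5, -1.5), 0),
        ((2.0, 1.0), 1), ((1.0, 2.0), 1), ((1.5, 1.5), 1)]
w = train(data)
print(w)
```

Because updates stop once every sampled point is classified correctly, the weights settle as soon as a separating line is found rather than continuing to push probabilities toward 0 and 1.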

After running 1000 iterations, we get optimized values of ‘w’. The decision line and the data look like this:
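For two input features and a bias term, the decision line is where P(C|X) = 0.5, that is, where w0 + w1·x + w2·y = 0. A minimal sketch of turning a weight vector into points on that line, assuming w is ordered [bias, x weight, y weight] and using made-up weights rather than the ones learned in this post:

```python
def decision_line_y(w, x):
    """Return the y on the decision line at a given x.

    Solves w[0] + w[1]*x + w[2]*y = 0 for y; requires w[2] != 0.
    """
    if w[2] == 0:
        raise ValueError("line is vertical: w[2] is zero")
    return -(w[0] + w[1] * x) / w[2]

w = [-8.0, 1.0, 1.0]  # hypothetical weights: the line x + y = 8
xs = [0.0, 4.0, 10.0]
ys = [decision_line_y(w, x) for x in xs]
print(list(zip(xs, ys)))  # points that could be passed to a plotting library
```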

Now, when the system gets a new data point, it calculates P(C|X) with the optimized value of ‘w’ and classifies the point accordingly.
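A minimal sketch of that classification step, assuming a 0.5 threshold, weights ordered [bias, x weight, y weight], and hypothetical weight values standing in for the optimized ‘w’:

```python
import math

def predict_proba(w, point):
    """P(C = Class 1 | point) under the logistic model."""
    x, y = point
    z = w[0] + w[1] * x + w[2] * y
    # Clamp z so math.exp cannot overflow for extreme inputs
    z = max(min(z, 700.0), -700.0)
    return 1.0 / (1.0 + math.exp(-z))

def classify(w, point, threshold=0.5):
    """Return 1 for Class 1, 0 for Class 0."""
    return 1 if predict_proba(w, point) >= threshold else 0

w = [-8.0, 1.0, 1.0]  # hypothetical optimized weights
print(classify(w, (6.0, 6.0)))  # z = 4, probability above 0.5
print(classify(w, (1.0, 2.0)))  # z = -5, probability below 0.5
```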

You can find the code for the whole program at:

Link to other related posts: