Gradient Descent in Machine Learning

Hello!

Before, starting with different types of machine learning algorithms, it’s better if we understand how gradient descent works and the maths behind it.

Gradient descent is a way to optimize a function. We will use it to optimize parameters by minimizing error that the program will make during prediction.

The easiest way to explain this is by example. You can find the link to the whole code at the end of the article.

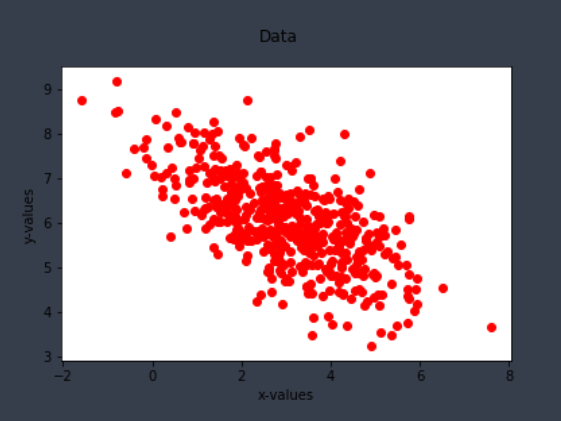

The data is 2 dimensional i.e. <x,y> pair. It looks something like this :

So, our task is to design a program through gradient descent so that, we can obtain a line that passes through most of the data points. Which implies it can predict expected y-value for a given x-value. Since we want to create a line, we start by taking an equation of a general line. That is:

y = mx + c , where, ‘m’ represents the slope of the line and ‘c’ represents the y-intercept of the line.

Now, we have to find the right values of ‘m’ and ‘c’ in the above equation such that line passes through most points. We will use our data set to determine these values. This process will be referred to as training the model.

At the end of training, we would get a function in terms of variable ‘x’ that would be able to map the given x-value to a predicted y-value.

So to start, we set ‘m’ and ‘c’ to some arbitrary value and then update them.

Now, coming to the error that we have to minimize. We will calculate mean squared error. The formula to be used would be:

where, Ypredicted(i) is the value we get by substituting the x-value of data into the line’s equation, Yactual(i) is the y-value of data.

Since the error function is proportional to the square of difference of both the values, the positive and negative values don’t get cancelled out.

As per gradient descent, to optimize ‘m’ and ‘c’ we will have to take partial derivative of the error function w.r.t. ‘m’ and ‘c’. Which will take us to these formulas:

Now, all we have to do is update the values of ‘m’ and ‘c’ till the time we can obtain the minimum value of error. We will be updating values of ‘m’ and ‘c’ by using the following formula. I suggest you to keep this formula in mind for future use as well.

where, eeta is the learning rate which would be predefined.

I had initialized values of ‘m’ and ‘c’ equal to 1 and eeta equal to 0.01. At start we got this plot with the initial ‘m’ and ‘c’ values:

After implementing gradient descent for 1000 iterations through the following code, we get optimized values of ‘m’ and ‘c’.

We get ‘m’ equal to -0.40 and ‘c’ equal to 7.20. After using the optimized values of ‘m’ and ‘c’, we get the following plot:

I also made a plot for visualizing the evolution of error with every iteration. The thing to observe is that the error decreases with every iteration. This is because the values of ‘m’ and ‘c’ keep getting updated in every iteration to minimize error.

Before ending I’d like to introduce you to the two types of gradient descents:

Batch Gradient Descent: While applying batch gradient descent, the value of parameters (‘m’ and ‘c’ in this case) would be updated once after traversing the whole data set. That implies, if you traverse the whole data set 10 times, the values would be updated 10 times only.

Stochastic Gradient Descent: While applying stochastic gradient descent, the values of parameters (‘m’ and ‘c’ in this case) are updated after taking feedback from every data point. This implies that if you’ll traverse a data set of 200 data points 2 times, the values would be updated 400 times.

I have used batch gradient descent for today’s post, since I updated the values of ‘m’ and ‘c’ after calculating error for the whole data set.

A quick recap! We started with trying to figure out gradient descent in order to optimize parameters for minimizing error. Gradient descent is used in regression (we will discuss about this in future), since at the end, we get a function that can predict continuous values. Going through the steps, we calculated gradient error w.r.t. every parameter to be optimized and then optimized them. We also discussed about batch and stochastic gradient descent, batch was implemented in this post, stochastic would be implemented in the next one.

The code for the whole program can be found here: